Workable Assessments: The science behind it all

Let’s admit it – while you’re the expert in your own field (whether that’s recruitment or another function in your company), that doesn’t always mean you’re also an expert on assessing candidates’ personalities and cognitive capabilities.

Nor should you be. Due to their abstract nature, personalities and cognitive abilities are not as easy to assess as, say, a candidate’s coding skills or the ability to close a lucrative sales deal.

But even for the most abstract tests, there are best practices that help you learn what you need to know about a candidate.

Enter science and technology. Since you’re digitally transforming your entire recruitment process, it makes sense to digitize your assessments as well. Plus, you want this stage to be as standardized as every other stage of your recruitment process.

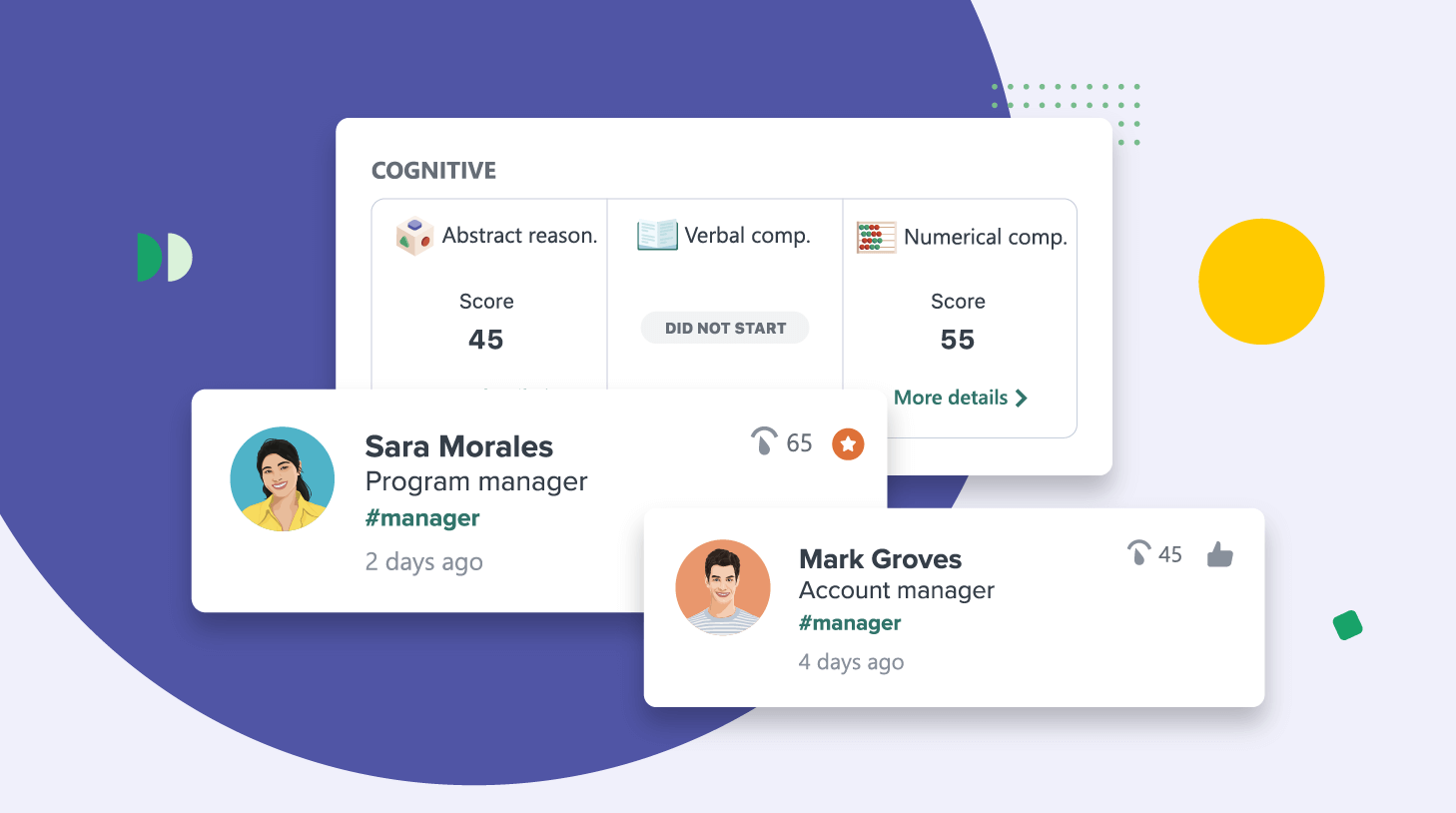

Workable’s cognitive and personality assessments are designed to fit seamlessly into that environment and help you make the right hire for your business. There are five areas where you can test candidates:

- Workplace personality

- Abstract reasoning

- Verbal comprehension

- Numerical comprehension

- Attention & focus

The first of these, of course, is the personality assessment, while the other four are for assessing cognitive abilities.

These tests weren’t designed on a whim. A great deal of background work and research, including input from international psychology experts, has gone into their design and development.

We know you want to ensure these assessments are valid and rooted in science before using them in your recruitment process, so we’ll give you some quick highlights to save you the time of researching the deeper material. Once you understand the science, you can then think about how you might include them in your process – please see our resources at the end that can help you with that.

Personality test science and methodology

Workable’s personality assessment is rooted in scientific methods and is built on the Five Factor model, which psychologists worldwide accept as the de facto model of personality.

The five factors emerged from statistical (factor) analysis of the adjectives people use to describe one another, and the model has been translated into and studied across many languages and cultures. They are:

- Agreeableness

- Conscientiousness

- Extraversion

- Openness to Experience

- Emotional Stability (the opposite pole of Neuroticism)

These factors are often referred to by the acronyms OCEAN or CANOE. They aren’t either-or categories; instead, each factor measures where a test-taker falls on a scale or continuum. Scores on the five factors have been scientifically linked to outcomes in health, education and on-the-job behavior, which makes the model valuable for evaluating candidates for a role.
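To make the continuum idea concrete, here is a minimal sketch of how a single factor could be scored from Likert-style answers. It is an illustration under stated assumptions, not Workable’s scoring method: it assumes a 1 to 5 response scale, a simple mean of item scores, and reverse-keyed items (statements worded in the opposite direction of the trait, a standard practice in IPIP-style scales). The item IDs and answers are hypothetical.

```python
# Assumed 5-point Likert scale: 1 = strongly disagree ... 5 = strongly agree.
LIKERT_MIN, LIKERT_MAX = 1, 5


def score_trait(responses, reverse_keyed):
    """Return one trait's mean item score on the 1-5 continuum.

    responses: dict of item_id -> answer (1..5)
    reverse_keyed: set of item_ids worded against the trait
    """
    adjusted = []
    for item_id, answer in responses.items():
        if not LIKERT_MIN <= answer <= LIKERT_MAX:
            raise ValueError(f"answer out of range for {item_id}: {answer}")
        if item_id in reverse_keyed:
            # Flip the scale so a higher number always means "more of the trait".
            answer = LIKERT_MIN + LIKERT_MAX - answer
        adjusted.append(answer)
    return sum(adjusted) / len(adjusted)


# Hypothetical Conscientiousness items and one candidate's answers.
answers = {
    "always_prepared": 4,
    "pays_attention_to_details": 5,
    "leaves_belongings_around": 2,  # reverse-keyed
    "shirks_duties": 1,             # reverse-keyed
}
print(score_trait(answers, {"leaves_belongings_around", "shirks_duties"}))  # → 4.5
```

The result is a position on the scale rather than a pass/fail label: 4.5 sits toward the high end of the continuum.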

Workable’s version of the Five Factor model goes deeper, analyzing 16 additional areas that were selected and adapted to be most relevant in the selection process. Workable’s personality assessments have also been analyzed for consistency using Cronbach’s alpha and other indexes. For some quick context, Cronbach’s alpha is a common statistic for estimating the internal consistency (reliability) of multi-item scales such as Likert-scale surveys (i.e. sliding-scale or continuum surveys): it indicates how consistently the items in a scale measure the same underlying trait.
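For readers who want to see what the statistic actually computes, here is a minimal sketch of the standard Cronbach’s alpha formula, alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The response matrix is hypothetical and is not Workable data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    scores: list of rows, one row per respondent, one column per item.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's total score on the scale.
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers from five respondents to a three-item, 1-5 Likert scale.
scores = [
    [4, 5, 4],
    [2, 3, 3],
    [5, 5, 4],
    [3, 2, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(scores), 3))  # → 0.886
```

When items rise and fall together across respondents, the total-score variance is large relative to the summed item variances and alpha approaches 1. A commonly cited rule of thumb treats values of roughly 0.7 or higher as acceptable, though the appropriate threshold depends on how the scale is used.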

Many questions have been adopted from the public-domain International Personality Item Pool (IPIP), which has contributed items to questionnaires in more than 850 published studies. The IPIP scales have demonstrated high internal consistency along with strong correlations with the scales of many established personality questionnaires. (You can examine the comparisons here.) This further supports the validity of many of the scales we measure in our personality assessments.

Cognitive test science and methodology

Professionally designed by testing experts at Psycholate, Workable’s cognitive assessments are likewise modeled after common cognitive tests used in the industry.

The verbal and numerical comprehension question sets were developed by testing experts and cross-checked by psychologists with a background in psychometrics to ensure validity and accuracy. Further analyses were also performed, including IRT analysis and parameter estimation (described in the next section), and only items that met the criteria were added to the question banks.

Meanwhile, for the abstract reasoning and attention & focus tests, multiple prototypes were created and tested before arriving at the final version that candidates see. Again, psychology experts reviewed these tests to ensure each one meets psychometric and industry quality standards.

Cronbach’s alpha was also calculated during the design and pilot stages.

Real-time scoring, adaptability and length

These tests are not simply scored by counting ‘correct’ and ‘incorrect’ answers. Instead, they use the widely recognized Item Response Theory (IRT) framework, a family of statistical models in which each question has a measured difficulty level and quality metric estimated from historical response data.
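The article doesn’t say which IRT model Workable uses. As an assumption for illustration, the sketch below uses the common two-parameter logistic (2PL) model, in which each item has a difficulty `b` and a discrimination `a` (one standard way to express an item’s “quality”: how sharply it separates weaker from stronger candidates). The function name and item values are hypothetical.

```python
import math


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL item response function (assumed model).

    theta: candidate ability; a: item discrimination; b: item difficulty.
    Returns the probability of a correct answer.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical items with the same difficulty but different discrimination.
for a in (0.5, 2.0):
    probs = [round(p_correct(theta, a, b=0.0), 2) for theta in (-2, 0, 2)]
    print(f"a={a}: {probs}")
```

In this model a candidate whose ability equals the item’s difficulty has a 50% chance of answering correctly, and a larger `a` makes the probability curve steeper around that point, so the item tells stronger and weaker candidates apart more clearly.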

Scoring for all four cognitive assessment tests uses the Expected A Posteriori (EAP) method, which updates the candidate’s score in real time after each answer and uses that updated score to choose the next question. In other words, each question presented depends on the candidate’s previous answers.
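EAP takes the mean of the posterior distribution of ability given the answers so far. Below is a minimal sketch, assuming the 2PL model, a standard normal prior on ability, and a simple grid over ability values from -4 to 4 (all assumptions; the article doesn’t specify these details). The posterior standard deviation it also returns is the usual EAP counterpart of a standard error.

```python
import math


def p_correct(theta, a, b):
    # 2PL item response function (assumed model).
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def eap_estimate(responses, n_points=81, lo=-4.0, hi=4.0):
    """EAP ability estimate on a grid of theta values.

    responses: list of (a, b, correct) tuples for items answered so far.
    Returns (posterior mean, posterior standard deviation).
    """
    grid = [lo + (hi - lo) * i / (n_points - 1) for i in range(n_points)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for a, b, correct in responses:
            p = p_correct(theta, a, b)
            w *= p if correct else 1.0 - p  # likelihood of this answer
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(grid, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(grid, weights)) / total
    return mean, math.sqrt(var)


# Before any answers, then after one correct answer to a medium item.
print(eap_estimate([]))
print(eap_estimate([(1.0, 0.0, True)]))
```

Re-running the estimate after every response is what makes real-time scoring possible: a correct answer pulls the estimate up, an incorrect one pulls it down, and the standard deviation shrinks as evidence accumulates.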

An adaptive test estimates the candidate’s ability after every new response, based on all answers up to that point. It then selects the most suitable question for that newly estimated ability level. As a result, an adaptive test is shorter: it asks only the questions that are needed, without burdening the candidate with uninformative ones, such as questions far too easy or too hard for them.
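One common way to pick “the most suitable question” is maximum-information selection: choose the unasked item that is most informative at the current ability estimate. The sketch below assumes the 2PL model, whose item information is a² · p · (1 - p); the item bank and names are hypothetical, and the article doesn’t state that Workable uses this exact rule.

```python
import math


def p_correct(theta, a, b):
    # 2PL item response function (assumed model).
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    # Fisher information of a 2PL item: highest when b is close to theta.
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def select_next_item(theta_hat, bank, asked):
    """Return the most informative unasked item, or None if none remain.

    bank: dict of item_id -> (a, b); asked: set of item_ids already used.
    """
    candidates = [i for i in bank if i not in asked]
    if not candidates:
        return None
    return max(candidates, key=lambda i: item_information(theta_hat, *bank[i]))


# Hypothetical three-item bank.
bank = {"easy": (1.0, -2.0), "medium": (1.0, 0.0), "hard": (1.0, 2.0)}
print(select_next_item(0.0, bank, asked=set()))     # → medium
print(select_next_item(1.8, bank, asked={"hard"}))  # → medium
```

Because information peaks where an item’s difficulty matches the candidate’s estimated ability, this rule naturally skips questions that are far too easy or too hard.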

Also, unlike preconfigured tests with a set number of questions, an adaptive test decides when to stop based on the answers received so far. IRT scoring provides not only an estimate of the candidate’s ability but also a measure of how precise that estimate is: the Standard Error of measurement (SEm). The lower the SEm, the more precise the score.

In general, a score based on more items is more reliable. Workable’s adaptive tests therefore stop once a candidate’s score is precise enough (i.e. its SEm is low enough) or once a maximum number of questions (for example, 20) has been reached, a cap that also helps protect the test content.

As a result, each candidate sees a different set of questions. Along with other measures, this strengthens test security: it’s practically impossible to replicate the exact same test or to create a cheat sheet for ‘passing’ it.

There are safeguards in place to ensure that the same question isn’t asked twice in the same assessment.
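Putting the pieces together, here is a minimal sketch of an adaptive loop with both stopping rules (a target SEm or a question cap) and a no-repeat safeguard. Everything model-specific is an assumption rather than Workable’s implementation: the 2PL model, EAP with a standard normal prior, maximum-information selection, the SEm target of 0.4, the hypothetical 40-item bank, and the simulated candidate.

```python
import math


def p_correct(theta, a, b):
    # 2PL item response function (assumed model).
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_info(theta, a, b):
    # Fisher information of a 2PL item at ability theta.
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def eap(responses, n_points=81, lo=-4.0, hi=4.0):
    # EAP estimate and posterior SD (used here as the SEm), N(0, 1) prior.
    grid = [lo + (hi - lo) * i / (n_points - 1) for i in range(n_points)]
    weights = []
    for t in grid:
        w = math.exp(-0.5 * t * t)
        for a, b, correct in responses:
            p = p_correct(t, a, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(grid, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(grid, weights)) / total
    return mean, math.sqrt(var)


def run_adaptive_test(bank, answer_fn, se_target=0.4, max_items=20):
    """bank: item_id -> (a, b); answer_fn: item_id -> True if answered correctly."""
    asked, responses = [], []
    theta, se = eap(responses)
    # Stop when the score is precise enough OR the question cap is reached.
    while se > se_target and len(asked) < max_items:
        # Safeguard: only items not yet asked in this session are eligible.
        remaining = [i for i in bank if i not in asked]
        if not remaining:
            break
        item = max(remaining, key=lambda i: item_info(theta, *bank[i]))
        a, b = bank[item]
        responses.append((a, b, answer_fn(item)))
        asked.append(item)
        theta, se = eap(responses)  # re-score after every answer
    return theta, se, asked


# Hypothetical bank: 40 items with difficulties spread evenly from -3 to 3.
bank = {f"q{i:02d}": (1.5, -3.0 + 6.0 * i / 39) for i in range(40)}
# Hypothetical candidate who answers correctly only when difficulty b < 1.0.
theta, se, asked = run_adaptive_test(bank, lambda item: bank[item][1] < 1.0)
print(f"theta={theta:.2f}, SEm={se:.2f}, items asked={len(asked)}")
```

Since each candidate’s answers steer which items come next, two candidates rarely see the same sequence. Production adaptive tests usually add further safeguards, such as item-exposure control, which this sketch omits.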

Want to learn more?

This is, of course, just a summary of the main science and methodology on which Workable Assessments is built. Now that you have a high-level understanding of the science behind the tests and what they measure, it’s time to think about how you might incorporate them as an essential tool in your hiring toolbox.

If you’re interested in learning more about our assessments feature – or even taking it for a test drive – don’t hesitate to reach out to us.

Finally, if you’d like to learn more about some of the concerns around assessments from a candidate’s perspective and why you needn’t worry, we cover that in this article. Meanwhile, here’s a tutorial on how to conduct a post-personality assessment interview.