Compliance in AI for recruitment

Generative AI lets recruiters efficiently handle large volumes of applicants and pay more individual attention to each candidate, but the technology is very new and not yet well understood.

Using AI in your hiring process can be a boon for your recruiting teams, but it requires strict adherence to legal and ethical standards to stay compliant and maintain candidate trust.

Regulations like the General Data Protection Regulation (GDPR) and Equal Employment Opportunity Commission (EEOC) guidelines play a key role in ensuring the safe and ethical use of AI technology. There are things you can do as an HR leader to make your AI-assisted recruitment processes fair, transparent, and accountable.

This post walks through best practices for staying compliant when you use AI in your recruitment processes, so you can manage these complexities and make your recruitment practices better than ever.

What regulations govern the use of AI in recruitment?

Generative AI is a very new technology that is advancing rapidly. Government agencies are still working to keep up with it and to write laws and regulations that mitigate its potential risks and dangers.

As an HR leader, there are a few legal standards to be aware of as you implement AI into your HR workflows. Understanding these regulations will help your organization use AI responsibly and ethically.

General Data Protection Regulation (GDPR)

The General Data Protection Regulation (GDPR) maintains strict data privacy guidelines across the European Union. Make sure that your AI systems are in line with GDPR requirements, especially when it comes to personal data.

Equal Employment Opportunity Commission (EEOC)

The EEOC enforces laws targeting workplace discrimination based on protected characteristics like race, religion, and sexual orientation. AI in recruitment should be designed to prevent bias and discrimination. Conduct regular bias audits to ensure your AI adoption is EEOC compliant.

Emerging AI-Specific Regulations

The 2024 European Union AI Act aims to classify AI systems based on their risk levels and impose strict regulations on the use of high-risk AI applications.

In the U.S., the White House’s Blueprint for an AI Bill of Rights, published in October 2022, also sets out guidelines for the use of AI technology. It is a non-binding framework rather than a law, and it seeks to protect individuals from the potential harm AI poses and to ensure that its use is fair, transparent, and accountable.

Reducing bias and improving fairness in AI for recruiting

You can’t predict how the laws and regulations regarding the use of AI will change and shift as the technology becomes more prevalent. The best thing you can do as an HR leader is to prioritize the data privacy of your candidates. Mishandling their data can put you in legal trouble and damage your organization’s brand reputation.

There are a few best practices to keep in mind when navigating the legal challenges of using AI for recruitment.

- Encrypt data to prevent unauthorized access

- Implement access controls and limit who can view and edit your data

- Regularly audit your AI usage to ensure compliance and security

- Anonymize your candidate data to reduce bias

As an HR professional, it’s up to you to make sure that your AI usage aligns with ethical standards and maintains fairness and accountability in the recruitment process.

Be transparent about how and why you use AI in your recruiting practices. That builds trust with your candidates and stakeholders and gives them confidence in the technology. Here’s how you can help build that foundation.

- Assign specific team members to oversee your AI usage

- Make detailed documentation of your AI decision-making

- Implement a process for human oversight of AI recommendations

- Train your employees regularly on ethical AI usage and standards
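To make the documentation step concrete, here is a minimal Python sketch of what one logged AI recommendation could look like. Everything in it is an assumption for illustration: the `AIDecisionRecord` fields, the `to_log_line` helper, and the sample values are hypothetical and not part of any particular recruiting tool.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIDecisionRecord:
    """One logged AI recommendation. All field names are illustrative."""
    candidate_id: str                 # pseudonymous ID, not a name or email
    job_id: str
    tool: str                         # which AI tool made the recommendation
    recommendation: str               # e.g. "advance" or "reject"
    rationale: str                    # short explanation captured from the tool
    reviewer: Optional[str] = None    # team member assigned to oversee the call
    reviewer_decision: Optional[str] = None
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: AIDecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example: the AI suggests advancing a candidate, and a
# named reviewer confirms the call before anything happens.
record = AIDecisionRecord(
    candidate_id="cand-0042",
    job_id="eng-117",
    tool="resume-screener",
    recommendation="advance",
    rationale="Meets 4 of 5 required skills",
)
record.reviewer = "j.doe"
record.reviewer_decision = "advance"
print(to_log_line(record))
```

Keeping the AI recommendation and the human decision in the same record makes it easier to see, during an audit, how often reviewers overrule the tool and why.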

Best compliance practices in AI for recruiting

Reducing bias in your AI-driven hiring process isn’t just a legal requirement; it’s good business practice and the right thing to do. AI itself can help you draft your compliance procedures, identify potential bias in your recruitment processes, and stay on top of the latest legal standards.

Fair and ethical AI systems can and should be used to promote diversity and inclusion in the workplace and create more transparent hiring practices.

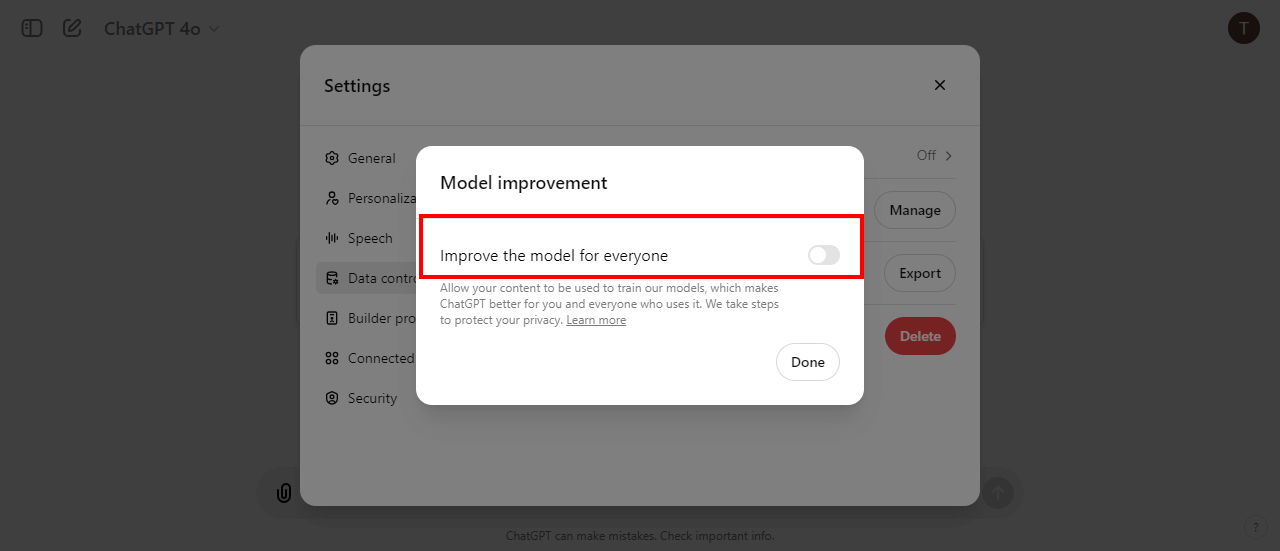

Turn off OpenAI data collection

You can use AI in your hiring process without allowing ChatGPT to collect your data for training purposes. Turning off this feature can minimize the risk of compromising your candidates’ data privacy if you use ChatGPT for resume screening.

To turn off ChatGPT data collection:

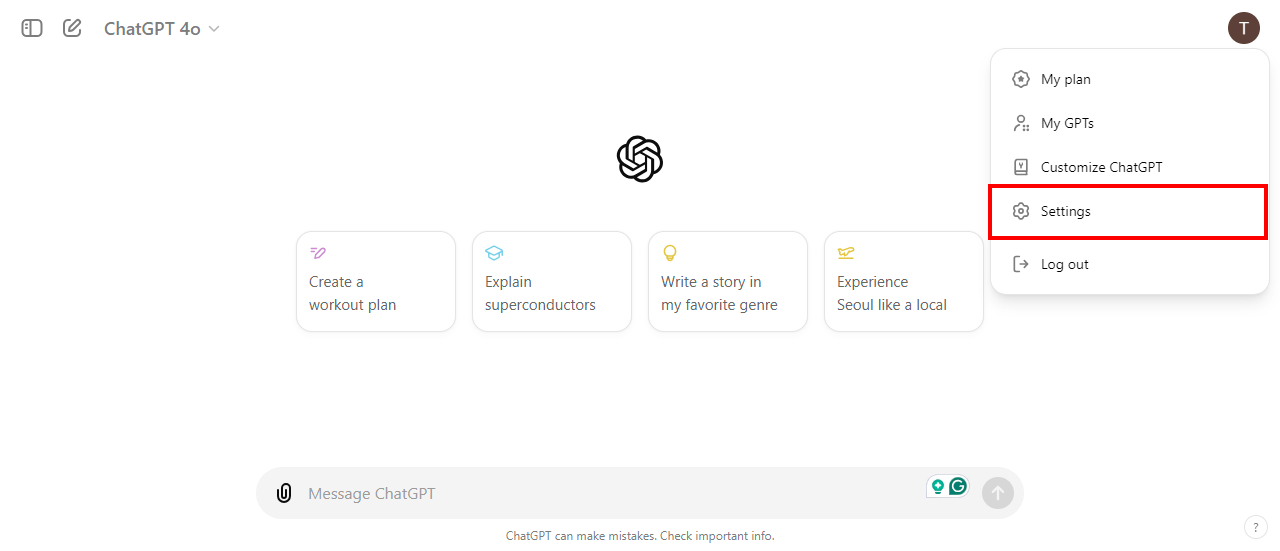

- Click your profile picture in the top-right corner of the ChatGPT screen

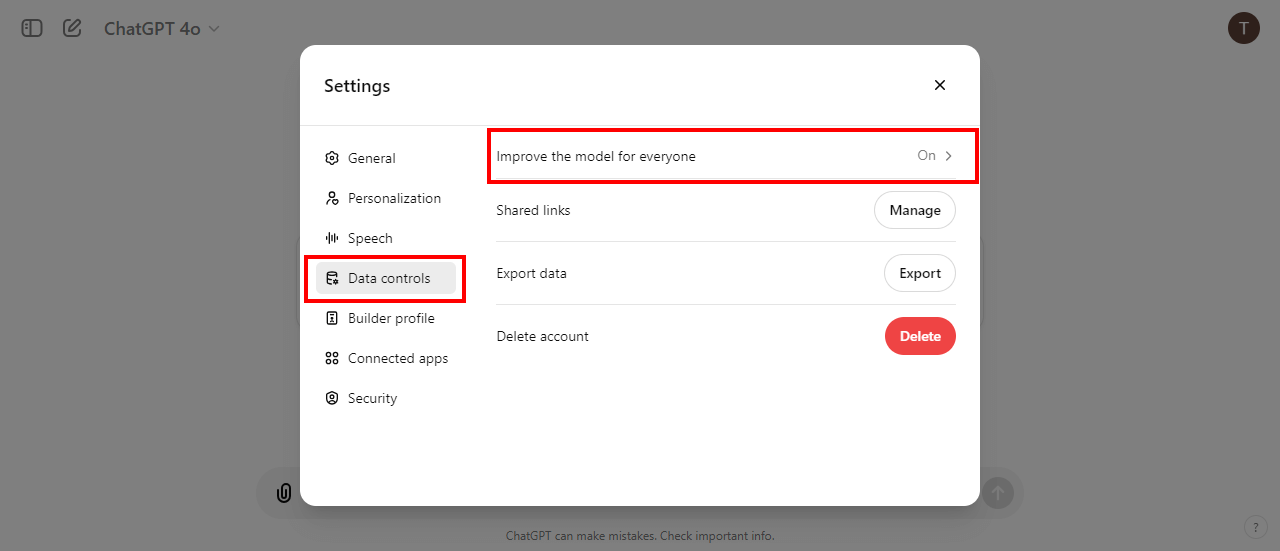

- Go to Settings, then Data controls, then “Improve the model for everyone”

- Toggle “Improve the model for everyone” off. This stops ChatGPT from using your content to train OpenAI models

Regular bias audits

Use training datasets that include candidates from a spectrum of backgrounds, demographics, and work experiences. Update and review that training data regularly to reflect changes in the job market and societal expectations of AI use.

Regular bias audits can identify biases that may have been introduced during the development of AI models. Regularly updating AI models with diverse and representative datasets can minimize bias and promote fairness in your hiring practices.
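One common screening check in a bias audit is the four-fifths rule of thumb from the EEOC’s Uniform Guidelines on Employee Selection Procedures: if one group’s selection rate is less than 80% of the highest group’s rate, the result deserves a closer look. The Python sketch below shows the arithmetic under simple assumptions. The function names, group labels, and sample numbers are made up for illustration, and a flagged ratio is a prompt for review, not a legal finding.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return {group: selected / applied} from (group, was_selected) pairs."""
    applied = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applied[group] for group in applied}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        raise ValueError("no candidates were selected, so ratios are undefined")
    return {group: rate / top for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose ratio falls below the four-fifths threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up screening outcomes: 5 of 10 selected in one group, 3 of 10 in another.
outcomes = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(outcomes)
ratios = impact_ratios(rates)
print(flag_groups(ratios))  # group_b falls below the 0.8 threshold
```

Running the same check at each stage of the funnel (screening, interview, offer) shows where in the process a gap first appears.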

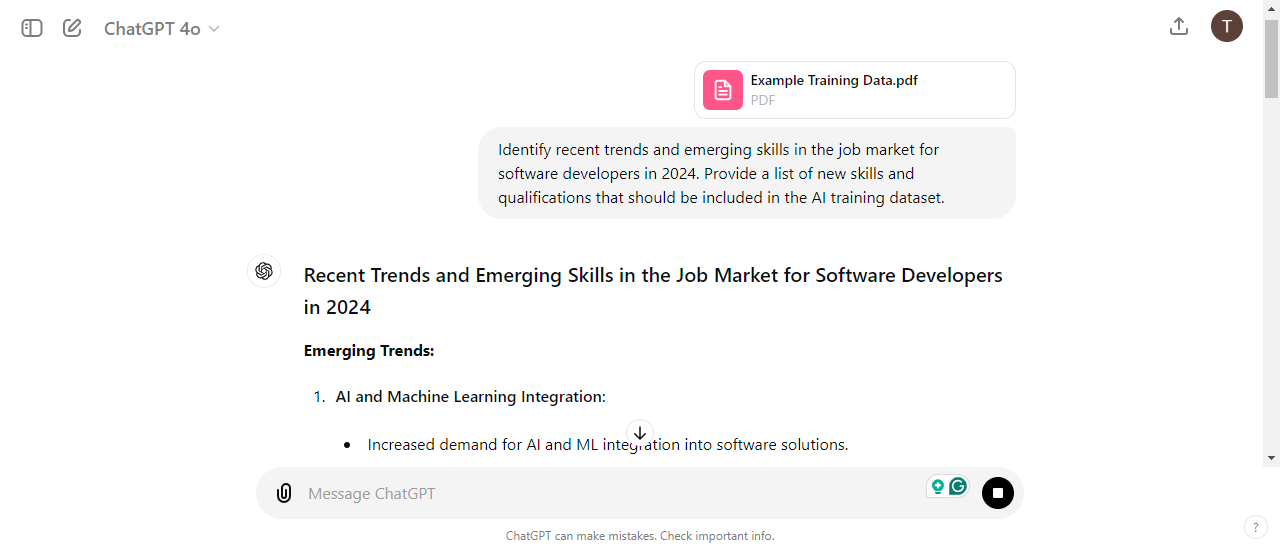

You can upload your candidate profiles to ChatGPT as a PDF and have it analyze them against job market trends. If you don’t have any, ChatGPT can help you create example candidate profiles that represent a range of backgrounds and experiences.

Using GPT-4 in ChatGPT (included in a ChatGPT Plus subscription), upload your training data as a PDF and try the following prompt:

ChatGPT Prompt: Identify recent trends and emerging skills in the job market for software developers in 2024. Provide a list of new skills and qualifications that should be included in the AI training dataset.

Human oversight

Any significant hiring decisions should be reviewed by humans. AI should enhance and assist in your HR team’s decision-making, not replace it. Having human oversight is key in ensuring the integrity of your hiring process.
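As a rough illustration of that principle, the Python sketch below routes AI recommendations so that the decisions with the most impact always reach a person. The `Recommendation` fields, the `"advance"`/`"reject"` labels, and the 0.9 confidence cutoff are all hypothetical choices for this example, not settings from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    candidate_id: str
    action: str        # "advance" or "reject" (illustrative labels)
    confidence: float  # tool-reported score between 0 and 1 (assumed)


def needs_human_review(rec, min_confidence=0.9):
    """Send every rejection and every low-confidence call to a person."""
    return rec.action == "reject" or rec.confidence < min_confidence


def route(recs, min_confidence=0.9):
    """Split recommendations into a human review queue and the rest."""
    review_queue, proceed = [], []
    for rec in recs:
        if needs_human_review(rec, min_confidence):
            review_queue.append(rec)
        else:
            proceed.append(rec)
    return review_queue, proceed


recs = [
    Recommendation("c1", "advance", 0.95),
    Recommendation("c2", "reject", 0.99),
    Recommendation("c3", "advance", 0.60),
]
review_queue, proceed = route(recs)
print([rec.candidate_id for rec in review_queue])
print([rec.candidate_id for rec in proceed])
```

In this sketch no candidate is rejected on the AI’s recommendation alone, and candidates who proceed still meet a recruiter at the next stage.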

Jeffrey Zhou, CEO & Founder of Fig Loans, describes how his company built its compliance strategy for using AI in recruitment and how human oversight fits into that process.

“To ensure our AI decisions align with ethical standards and compliance requirements, we established an internal AI Ethics Board,” says Zhou. “This board is made up of diverse personnel from different departments that meet on a regular basis to assess AI-driven hiring decisions. They offer a variety of opinions, ensuring that our AI is not just compliant, but also equitable and inclusive.”

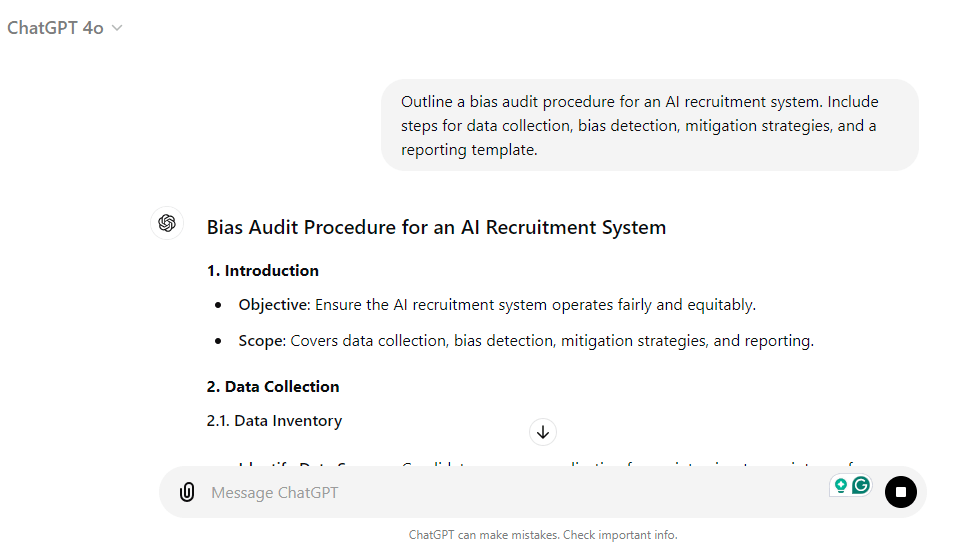

Assign specific team members to monitor AI decisions and ensure compliance with ethical standards. You can even have AI draft a bias audit for the AI oversight team to conduct periodically.

ChatGPT Prompt: Outline a bias audit procedure for an AI recruitment system. Include steps for data collection, bias detection, mitigation strategies, and a reporting template.

Communicate AI decisions

Candidates can generally tell when the text they read is AI-generated, and they don’t like it. Failing to disclose how you use AI in the hiring process is a good way to damage your reputation as an employer. A much better alternative is to state upfront in the job description how you use AI in your hiring decisions, before candidates apply.

Include a section in the job posting that informs candidates about the use of AI in your hiring process. That helps set clear expectations from the beginning.

ChatGPT Prompt: Draft a section for a job description that explains how AI is used in the hiring process, including the criteria it evaluates.

Training and awareness

AI is constantly changing and growing, and the way you use AI in your recruitment practices must change with it.

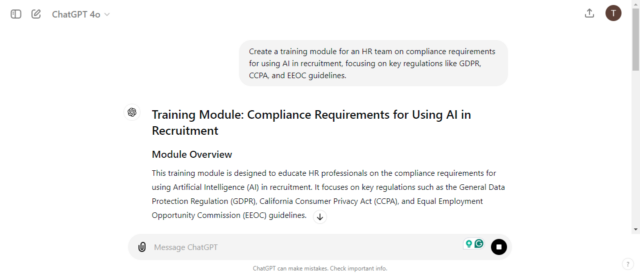

Provide ongoing education and training for your recruiting team about ethical AI use and bias mitigation techniques.

Any new HR professionals should undergo AI training during their onboarding to understand AI compliance requirements and how to effectively use these tools. Conduct regular workshops on AI ethics and bias detection every quarter. Encourage your recruiting teams to attend webinars and industry conferences to keep them informed on trends in AI and recruitment.

ChatGPT can help you outline the training modules to help keep your recruiters abreast of developments in AI.

ChatGPT Prompt: Create a training module for an HR team on compliance requirements for using AI in recruitment, focusing on key regulations like GDPR, CCPA, and EEOC guidelines.

Anonymized screening processes

Candidate evaluation should be based on skills. An anonymized candidate screening process helps you base your hiring decisions on relevant skills and work history rather than on personal attributes.

When you focus solely on what matters for the role, you can make your candidate evaluations more objective and fair.
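Here is a minimal Python sketch of what anonymizing a candidate profile before screening could look like. The list of personal fields, the profile layout, and the `anonymize` and `pseudonym` helpers are assumptions made for this example. The keyed hash gives each candidate a stable reference so your team can re-link the screened profile later, which also means the result is pseudonymized rather than fully anonymous and should still be handled as personal data.

```python
import hashlib
import hmac

# Fields treated as personal attributes in this sketch (an assumed list).
PERSONAL_FIELDS = {"name", "email", "phone", "photo_url", "date_of_birth", "address"}


def pseudonym(candidate_email: str, secret_key: bytes) -> str:
    """Derive a stable reference from the email with a keyed hash."""
    digest = hmac.new(
        secret_key, candidate_email.lower().encode("utf-8"), hashlib.sha256
    )
    return "cand-" + digest.hexdigest()[:12]


def anonymize(profile: dict, secret_key: bytes) -> dict:
    """Drop personal fields and add a pseudonymous reference instead."""
    screened = {k: v for k, v in profile.items() if k not in PERSONAL_FIELDS}
    screened["candidate_ref"] = pseudonym(profile["email"], secret_key)
    return screened


# Hypothetical profile and key; in practice the key lives in a secrets store.
profile = {
    "name": "Alex Example",
    "email": "Alex@example.com",
    "phone": "555-0100",
    "skills": ["Python", "SQL"],
    "years_experience": 6,
}
screened = anonymize(profile, secret_key=b"demo-key-only")
print(sorted(screened))
```

Only the skills, experience, and reference reach the screening step, so reviewers and AI tools evaluate what matters for the role.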

The Workable interview question generator can give you something to start with. Then, you can use AI tools to scrub any language that might include unconscious bias.

ChatGPT Prompt: Act as an HR leader. Rewrite these interview questions to focus on the candidate’s skills and qualifications to minimize the focus on personal attributes in hiring decisions. Here are the questions:

[LIST QUESTIONS BELOW]

Embrace ethical AI in recruitment

Prioritize compliance, transparency, and fairness in your AI-enhanced recruitment processes, and you and your recruitment teams can create better, more inclusive hiring practices. Doing so not only protects you from potential legal risk but also starts things off on the right foot with potential candidates, who see that you use AI responsibly and openly.

As you continue to build AI into your recruitment practices, remember to:

- Stay up-to-date on the latest regulations and guidance on AI use, such as GDPR and EEOC guidelines

- Conduct regular trainings, such as quarterly workshops, for your HR team on how to use AI

- Openly communicate with your candidates about how you use AI in the hiring process

- Regularly audit your AI usage in your hiring processes, and have dedicated team members to oversee its implementation

Workable AI offers a dedicated suite of AI tools for HR professionals and recruiters. Get started with a free trial and see how AI can make your hiring processes better and more efficient than ever.

Frequently asked questions

- What regulations govern AI in recruitment?

- Key regulations include the GDPR for data privacy and EEOC guidelines for preventing workplace discrimination. The European Union AI Act and the U.S. Blueprint for an AI Bill of Rights also address AI usage, with the aim of fair and ethical practices in recruitment.

- How can HR leaders ensure AI compliance?

- HR leaders should encrypt data, implement access controls, and regularly audit AI usage. They should also anonymize candidate data to reduce bias and assign team members to oversee AI compliance, ensuring ethical AI use in recruitment processes.

- Why is reducing bias important in AI recruitment?

- Reducing bias supports fairness and compliance with EEOC guidelines. It promotes diversity and inclusion, enhances your organization's reputation, and builds trust with candidates. Regular bias audits and diverse training datasets help achieve this.

- What are best practices for transparent AI use in recruitment?

- Be transparent about AI usage by documenting decision-making processes and implementing human oversight. Communicate AI practices in job postings to set clear expectations. Regular training on ethical AI use and bias detection is also essential.

- How can AI improve fairness in hiring?

- AI can help identify biases and ensure fair candidate evaluations by focusing on skills and qualifications. Anonymized screening processes and regular updates to AI models with diverse datasets promote equity and inclusion in hiring practices.