How we developed Auto-Suggest: the data science behind our new automated talent sourcing tool

As a data scientist here at Workable, I get to work on some challenging projects. Most recently, I’ve been leading the Data Science team and working closely with the Sourcing team to develop our new automated talent sourcing tool, Auto-Suggest.

Auto-Suggest is talent acquisition technology that generates a longlist of up to 200 suggested candidates for any role created in Workable. With the longlist taken care of, you can contact appropriate candidates for an opening within minutes of the position’s approval.

The automated workflow for creating the candidate longlist involves, among other steps, deep analysis of both the job description and candidate information. This is something our team has been working on for some time. In this blog post, I describe the data science techniques we use at each step in the process and how they combine to make Auto-Suggest such a powerful automated talent sourcing tool.

Understanding keyword extraction

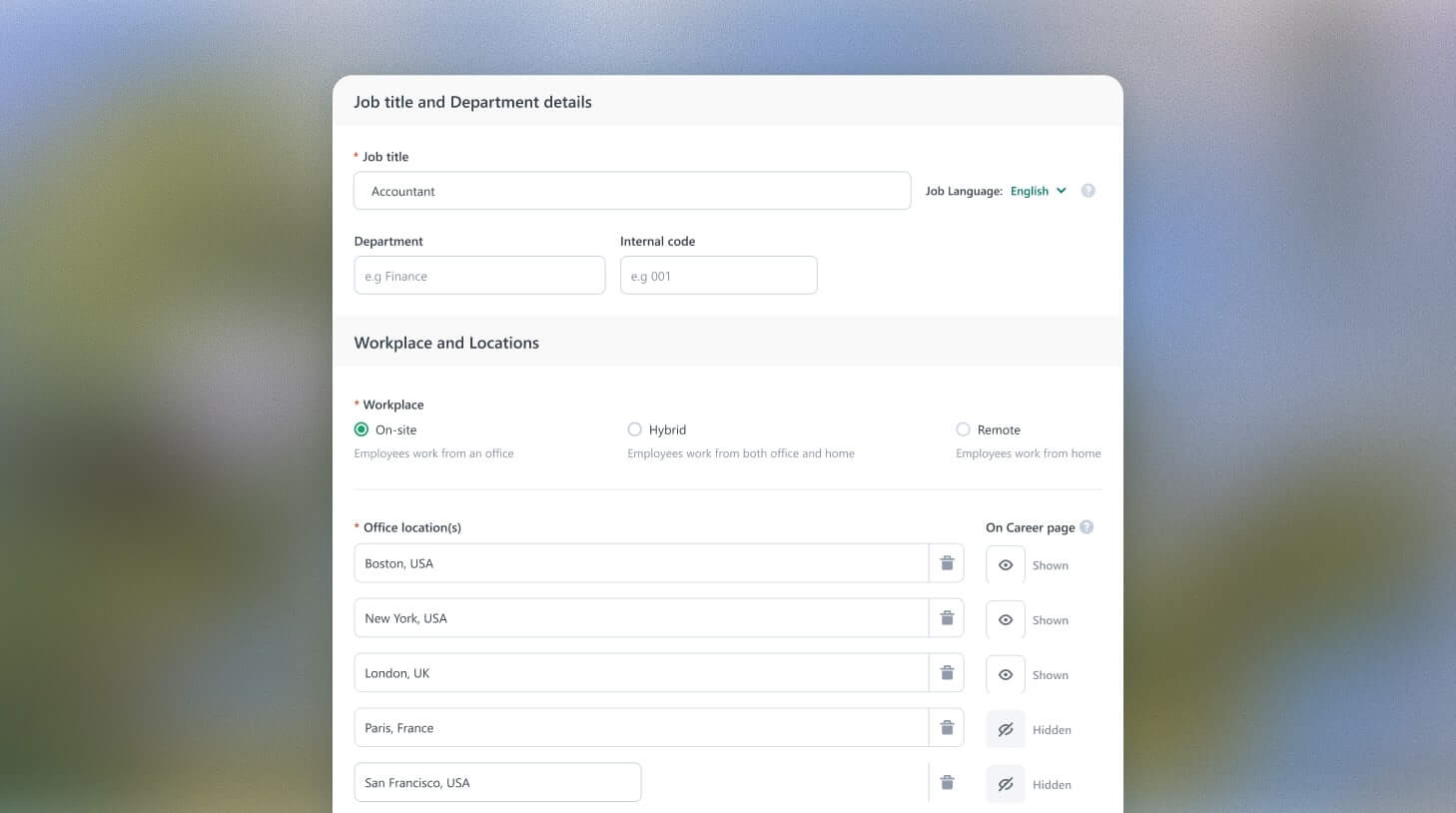

The keyword or keyphrase extraction service is responsible for generating the summary of a job posting. It does this by capturing the most descriptive words or phrases within the job posting text fields (for example, title, description and requirement summary).

Typical descriptive elements of a job posting include the skills or certifications a position requires, a sanitized version of the job title, the key tasks of the position and so on.

We attack the problem of keyword extraction using a supervised learning approach. More specifically, we train a binary classifier (currently an Extreme Gradient Boosting classifier) to evaluate whether a specific word or phrase is a candidate keyword or keyphrase. That means we evaluate the “keywordness” of the phrase. Having trained this classifier, we extract keywords by evaluating all words/phrases from the job posting and choosing the ones with the highest “keywordness” score.

In order to decide whether a phrase or word is relevant, the classifier makes use of the following information, among others:

- The term and document frequency of the word or phrase

- The appearance of the word or phrase in a gazetteer of known skills, job titles and education fields

- The appearance of the word or phrase within a specific HTML element

- The tendency of the word or phrase to appear in a specific domain (domain-descriptive phrases)

- The morphology of the word or phrase (for example, capitalized)
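To make the feature list above more concrete, here is a minimal Python sketch of how a candidate phrase might be turned into features and scored. Everything in it is an assumption for illustration: the `GAZETTEER` set, the toy corpus, the feature names, the use of the job title as a stand-in for "a specific HTML element", and above all the hand-picked `WEIGHTS`. Those weights take the place of the trained gradient boosting classifier and are not learned from data.

```python
import math
import re

# Hypothetical gazetteer of known skills and job titles (illustration only).
GAZETTEER = {"python", "machine learning", "data scientist", "sql"}

# Hand-picked weights standing in for a trained classifier; NOT learned values.
WEIGHTS = {"tf": 0.8, "idf": 1.0, "in_gazetteer": 2.5, "in_title": 1.5, "capitalized": 0.3}
BIAS = -4.0


def count_phrase(phrase, text):
    """Count whole-phrase, case-insensitive occurrences of `phrase` in `text`."""
    pattern = r"(?<!\w)" + re.escape(phrase.lower()) + r"(?!\w)"
    return len(re.findall(pattern, text.lower()))


def features(phrase, title, description, corpus):
    """Build a feature dict for one candidate phrase of one job posting."""
    posting = title + " " + description
    docs_with_phrase = sum(1 for doc in corpus if count_phrase(phrase, doc) > 0)
    return {
        "tf": count_phrase(phrase, posting),
        # Smoothed inverse document frequency: rarer phrases score higher.
        "idf": math.log((1 + len(corpus)) / (1 + docs_with_phrase)),
        "in_gazetteer": float(phrase.lower() in GAZETTEER),
        # Stand-in for "appears within a specific HTML element".
        "in_title": float(count_phrase(phrase, title) > 0),
        "capitalized": float(phrase[:1].isupper()),
    }


def keywordness(feats):
    """Logistic score in (0, 1); a trained classifier would replace this."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in feats.items())
    return 1.0 / (1.0 + math.exp(-z))


def extract_keywords(candidates, title, description, corpus, top_k=3):
    """Rank candidate phrases by keywordness and keep the top_k."""
    scored = [(keywordness(features(c, title, description, corpus)), c) for c in candidates]
    return [c for _, c in sorted(scored, reverse=True)[:top_k]]


# Toy data, made up for this example.
corpus = [
    "We are a fast growing team and we offer great benefits",
    "Our team builds Python services and we offer great benefits",
    "Join our team of accountants",
]
title = "Data Scientist"
description = "Our team needs Python and machine learning experience. Python is a must."
candidates = ["Python", "machine learning", "Data Scientist", "team", "experience"]
top = extract_keywords(candidates, title, description, corpus)
print(top)
```

In this toy setup, a generic word such as "team" scores low because it appears in every posting and in no gazetteer, while phrases that are rare across postings and known to the gazetteer rise to the top.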

Query Terms (QuTe) and the semantic interpretation of data

The purpose of the Query Terms (QuTe) module is to provide a semantic interpretation of the data ‘living’ in our database. Following the paradigm of well-known embedding techniques [1][2], we represent each term with a real-valued vector and we train these vectors to attain meaningful values.

Our basic assumption is that data bound to a single entity (candidate or job) are relevant to one another, and thus their representations should be similar. Starting with random initial vectors, we iteratively optimize these representations, seeking to maximize the co-occurrence probability of relevant terms. Clusters of semantically similar terms begin to appear after only a few passes over the training data (epochs).
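The optimization described above can be sketched in a few lines of plain Python. This is a toy illustration under several assumptions of ours, not the production training code: each term gets a single vector, the objective is a logistic loss with randomly drawn negative terms (in the spirit of [1]), and the entities, hyperparameters and function names are invented for the example.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def train_embeddings(entities, dim=8, epochs=200, lr=0.05, negatives=2, seed=0):
    """Learn one vector per term from lists of terms that share an entity."""
    rng = random.Random(seed)
    vocab = sorted({term for entity in entities for term in entity})
    # Random initial vectors, as described in the text.
    vecs = {t: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for t in vocab}

    def step(a, b, label):
        """One SGD step: pull a and b together if label is 1, push apart if 0."""
        va, vb = vecs[a], vecs[b]
        g = lr * (label - sigmoid(dot(va, vb)))
        vecs[a] = [x + g * y for x, y in zip(va, vb)]
        vecs[b] = [y + g * x for x, y in zip(va, vb)]

    for _epoch in range(epochs):
        for entity in entities:
            outside = [t for t in vocab if t not in entity]
            for a in entity:
                for b in entity:
                    if a == b:
                        continue
                    step(a, b, 1)  # terms bound to the same entity
                    for _k in range(negatives):
                        step(a, rng.choice(outside), 0)  # random unrelated term
    return vecs


# Toy "entities": each list holds the skills of one made-up candidate.
entities = [
    ["scikit-learn", "scipy", "matplotlib"],
    ["scikit-learn", "scipy", "pandas"],
    ["scipy", "matplotlib", "pandas"],
    ["welding", "soldering", "metal fabrication"],
    ["welding", "metal fabrication", "blueprint reading"],
    ["soldering", "blueprint reading", "welding"],
]
vecs = train_embeddings(entities)
within = cosine(vecs["scikit-learn"], vecs["scipy"])
across = cosine(vecs["scikit-learn"], vecs["welding"])
print(f"same cluster: {within:.2f}, different cluster: {across:.2f}")
```

Terms that share entities are pulled together and randomly drawn terms are pushed apart, so the two groups of skills end up in different regions of the vector space.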

The four semantic categories we focus on are job titles, fields of study, candidate skills and job keywords. We support multi-word embeddings, which expose relationships analogous to those reported in the original Word2Vec paper [1]. For example, the skill ‘scikit-learn’ is clustered with other similar Python libraries such as ‘scipy’ and ‘matplotlib’. Similarly, the job title ‘machine learning engineer’ is placed close to semantically relevant job titles such as ‘data science engineer’, ‘data scientist’ or ‘machine learning scientist’.

Crafting complex Boolean queries with Query Builder (QuBe)

Using information from previous components in the pipeline, the Query Builder (QuBe) module generates an appropriate Boolean search query. This query is used to retrieve candidates directly from the web. In short, to increase recall we expand the original job description (title and keywords) using QuTe’s similar terms list. Then we use QuBe to search for candidate profiles across a large number of data providers and search engines. This component handles the tradeoff between the size of the response (the number of returned profiles) and the quality of those profiles in terms of relevance to the job.
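As a rough illustration of query expansion, the Python sketch below builds a Boolean query by AND-ing one OR-group per original term. The function names, the `similar_terms` mapping and the `max_expansions` knob are hypothetical, and real search providers each have their own query syntax, so treat the output format as an assumption.

```python
def quote(term):
    """Quote terms with spaces or punctuation so they are treated as phrases."""
    return term if term.isalnum() else f'"{term}"'


def or_group(terms):
    """Join alternatives with OR, dropping case-insensitive duplicates."""
    seen, unique = set(), []
    for term in terms:
        if term.lower() not in seen:
            seen.add(term.lower())
            unique.append(term)
    joined = " OR ".join(quote(t) for t in unique)
    return f"({joined})" if len(unique) > 1 else joined


def build_query(title, keywords, similar_terms, max_expansions=2):
    """AND together one OR-group per original term.

    `similar_terms` maps a term to its neighbours, most similar first
    (a hypothetical stand-in for the similar terms list from QuTe).
    A larger `max_expansions` widens the query: more profiles, less precision.
    """
    groups = []
    for term in [title] + list(keywords):
        expansions = similar_terms.get(term, [])[:max_expansions]
        groups.append(or_group([term] + expansions))
    return " AND ".join(groups)


# Made-up neighbours for the example.
similar = {
    "machine learning engineer": [
        "data scientist",
        "machine learning scientist",
        "data science engineer",
    ],
    "scikit-learn": ["scipy", "matplotlib"],
}
query = build_query("machine learning engineer", ["scikit-learn", "python"], similar)
strict_query = build_query(
    "machine learning engineer", ["scikit-learn", "python"], similar, max_expansions=0
)
print(query)
print(strict_query)
```

Raising `max_expansions` trades precision for recall, which is one simple way to picture the size versus quality tradeoff described above.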

Identifying relevant candidates with Matcher

Behind Auto-Suggest is a multi-step process that accumulates noise from each of its individual components. To mitigate this, we’ve built the Matcher, a classification mechanism that kicks in at the final step of the pipeline. The Matcher’s responsibility is to predict whether a candidate is a good fit for a job. Using signals from candidate profiles and job descriptions, the Matcher identifies relevant candidates for a position.

First, we transform the job and the candidate in each pair into their corresponding vector representations. For each candidate we keep only their skills, work experience and education entries. The candidate’s vector representation is the concatenation of the corresponding elements:

- A candidate skills vector is computed from the embeddings of the candidate’s skills.

- A candidate work experience vector results from the embeddings of the job titles, taking into account job duration and recency.

- A candidate education vector is derived from the embeddings of the candidate’s fields of study.

Similarly, to compute a job description’s vector we combine the embedding of the job title and the keywords’ embeddings. Both the job and candidate vectors are then fed as input to the Matcher.
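The Python sketch below shows one way these vectors could be assembled. The details are our assumptions for illustration: the toy three-dimensional `EMB` table, plain averaging of skill, education and keyword embeddings, concatenation of the job title and keyword vectors, and the `experience_weight` formula, which multiplies job duration by an exponential recency decay with a made-up half-life.

```python
DIM = 3  # toy embedding size; real embeddings would be much larger

# Hypothetical embeddings; in the pipeline these would come from QuTe.
EMB = {
    "python": [1.0, 0.0, 0.0],
    "sql": [0.0, 1.0, 0.0],
    "data scientist": [0.8, 0.2, 0.0],
    "data analyst": [0.2, 0.8, 0.0],
    "computer science": [0.5, 0.5, 0.0],
    "machine learning": [0.9, 0.1, 0.0],
}


def weighted_mean(terms, weights=None):
    """Weighted average of the embeddings of `terms`; zeros if nothing is known."""
    weights = weights or [1.0] * len(terms)
    known = [(EMB[t], w) for t, w in zip(terms, weights) if t in EMB and w > 0]
    total = sum(w for _, w in known)
    if total == 0:
        return [0.0] * DIM
    return [sum(v[i] * w for v, w in known) / total for i in range(DIM)]


def experience_weight(duration_years, years_since_end, half_life=3.0):
    """Assumed weighting: longer jobs count more, older jobs decay exponentially."""
    return duration_years * 0.5 ** (years_since_end / half_life)


def candidate_vector(skills, experience, fields_of_study):
    """Concatenate the skills, work experience and education vectors.

    `experience` is a list of (job_title, duration_years, years_since_end).
    """
    titles = [title for title, _, _ in experience]
    weights = [experience_weight(d, r) for _, d, r in experience]
    return (weighted_mean(skills)
            + weighted_mean(titles, weights)
            + weighted_mean(fields_of_study))


def job_vector(title, keywords):
    """Concatenate the job title embedding with the averaged keyword embeddings."""
    return weighted_mean([title]) + weighted_mean(keywords)


cand = candidate_vector(
    skills=["python", "sql"],
    experience=[("data scientist", 2.0, 0.0), ("data analyst", 2.0, 3.0)],
    fields_of_study=["computer science"],
)
job = job_vector("data scientist", ["python", "machine learning"])
pair_features = job + cand  # one input row for a job / candidate classifier
print(len(job), len(cand), len(pair_features))
```

In this example the current two-year "data scientist" role gets twice the weight of an equally long "data analyst" role that ended three years ago, so the work experience vector leans toward the more recent title.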

We view the matching process as a binary classification problem and we employ negative sampling [1][3] techniques to build our training / evaluation datasets. A job / candidate pair is considered positive if a candidate applied for the job and recruiters marked the application as acceptable inside Workable. On the other hand, negative samples are built artificially by randomly selecting candidate profiles from the database. Our current implementation follows a stacking classifier architecture where the base estimators are a collection of neural networks and Gradient Boosted Decision Trees.
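The construction of positive and negative pairs can be sketched as follows. This is a simplified Python illustration with invented identifiers; the number of negatives per positive, the fixed seed and the rule of skipping candidates already accepted for the same job are assumptions rather than a description of the production dataset builder.

```python
import random


def build_dataset(accepted_pairs, all_candidate_ids, negatives_per_positive=2, seed=0):
    """Return (job_id, candidate_id, label) rows for a binary classifier.

    `accepted_pairs` holds (job_id, candidate_id) applications that recruiters
    marked as acceptable; these become the positives (label 1). Negatives
    (label 0) pair the same job with randomly drawn candidates.
    """
    rng = random.Random(seed)
    positives = set(accepted_pairs)
    pool = sorted(all_candidate_ids)
    rows = []
    for job_id, candidate_id in sorted(positives):
        rows.append((job_id, candidate_id, 1))
        # Assumption: skip candidates already accepted for this job so that a
        # known positive is never labelled as a negative.
        eligible = [c for c in pool if (job_id, c) not in positives]
        k = min(negatives_per_positive, len(eligible))
        for negative_id in rng.sample(eligible, k):
            rows.append((job_id, negative_id, 0))
    rng.shuffle(rows)
    return rows


# Made-up identifiers for the example.
accepted = [("job-1", "cand-a"), ("job-1", "cand-b"), ("job-2", "cand-c")]
candidates = ["cand-a", "cand-b", "cand-c", "cand-d", "cand-e", "cand-f"]
rows = build_dataset(accepted, candidates)
for row in rows:
    print(row)
```

Because negatives are drawn at random, some of them may in fact be reasonable fits for the job; the sketch accepts that label noise and makes no attempt to filter it.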

This blog post was written by Vasilis Vassalos and the Data Science team.

Vasilis is the Chief Data Scientist at Workable. He has a PhD in Computer Science from Stanford University and is a Professor of Informatics at the Athens University of Economics and Business.